Polarion & AI: Don’t Just Automate - Orchestrate Intelligence

Stop writing detached scripts and start building an intelligent ecosystem. Learn why a Centralized AI Orchestration layer is the key to transforming Polarion from a static tool into a dynamic "Senior Intern" that handles the grunt work while you focus on expert review.

Polarion is renowned for its modularity. Between Java Plugins, Velocity scripting, and rich API access, the platform allows us to inject custom logic into almost any workflow. However, as we integrate Large Language Models (LLMs), the goal shouldn't be to build isolated scripts. Instead, we should aim for a Centralized AI Orchestration layer.

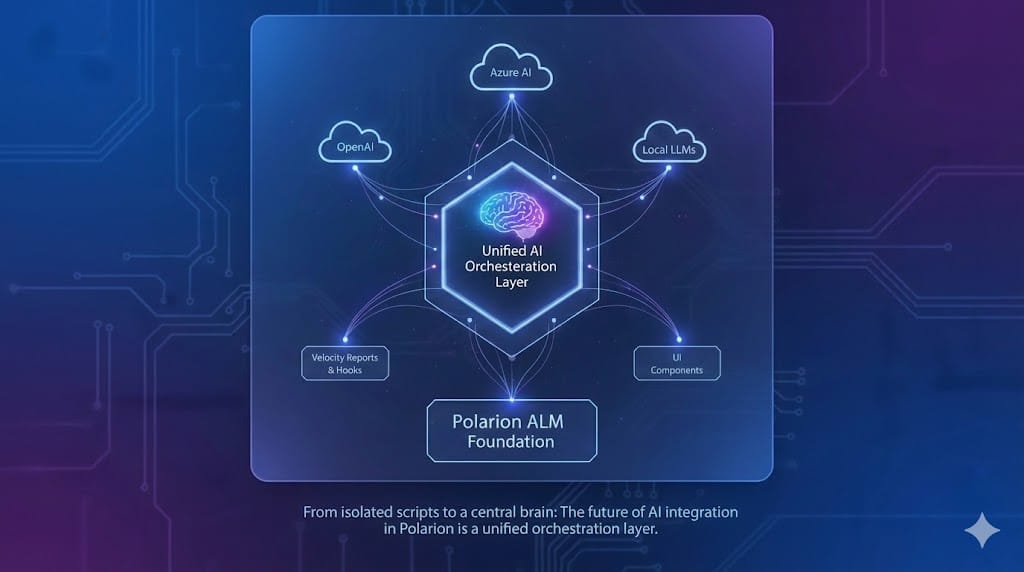

The Architecture: A Unified "AI Brain"

Rather than scattering AI calls across widgets and workflow functions, I advocate a Centralized LLM Handler. By building it as a generic Java Plugin, you create a single, secure gateway for your entire environment, where credentials and provider configuration live in one place.
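The following is a minimal Java sketch of such a gateway. It is an illustration under stated assumptions, not a reference implementation. The names `LlmProvider`, `EchoProvider`, and `LlmHandler` are hypothetical and not part of any Polarion API. `EchoProvider` is a stand-in for a real Azure, OpenAI, or local-model client. How the handler is registered as a service inside a Polarion plugin is not shown.

```java
import java.util.Map;
import java.util.Objects;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical abstraction: one implementation per backend (Azure, OpenAI, local model).
interface LlmProvider {
    String name();

    String complete(String prompt);
}

// Stand-in backend for illustration; a real provider would call the vendor's HTTP API.
final class EchoProvider implements LlmProvider {
    private final String name;

    EchoProvider(String name) {
        this.name = name;
    }

    @Override
    public String name() {
        return name;
    }

    @Override
    public String complete(String prompt) {
        return "[" + name + "] " + prompt;
    }
}

// The single gateway: reports, UI components, and hooks call ask(), never a vendor SDK.
final class LlmHandler {
    private final Map<String, LlmProvider> providers = new ConcurrentHashMap<>();
    private volatile String activeProvider; // null until the first provider is registered

    synchronized void register(LlmProvider provider) {
        providers.put(provider.name(), provider);
        if (activeProvider == null) {
            activeProvider = provider.name(); // first registered provider becomes the default
        }
    }

    // Switching backends happens here, in one place; callers are unaffected.
    synchronized void setActiveProvider(String name) {
        if (!providers.containsKey(name)) {
            throw new IllegalArgumentException("Unknown provider: " + name);
        }
        activeProvider = name;
    }

    String ask(String prompt) {
        Objects.requireNonNull(prompt, "prompt");
        String active = activeProvider;
        if (active == null) {
            throw new IllegalStateException("No LLM provider registered");
        }
        return providers.get(active).complete(prompt);
    }
}
```

With this shape, moving from one backend to another means registering a new `LlmProvider` and calling `setActiveProvider`. Every caller keeps using `ask` unchanged.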

Why this matters to me:

- Generic Integration: You can swap providers (Azure, OpenAI, local models) in one place, not in fifty scripts.

- Metadata-Driven: By using JSON Prompt Templates, we can change how the AI "thinks" or behaves without restarting the server.

- True Omnichannel: Once the handler is in place, you can trigger AI logic from anywhere: Velocity-based reports, JavaScript UI components, or Java-based Save Hooks.
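The metadata-driven idea can be sketched in Java as follows. This is again an illustration rather than the author's implementation, and it makes several assumptions:

- Each template is a JSON file such as `{"id": "req-to-test", "system": "...", "user": "Draft test steps for: {{requirement}}"}`.
- The names `PromptTemplate` and `TemplateStore` and the `{{name}}` placeholder syntax are hypothetical.
- Each template is stored as one file per id in a directory.
- JSON parsing is delegated to a caller-supplied function, for example one backed by Jackson or Gson, because the JDK has no built-in JSON parser.

Since the file is re-read on every lookup, an edited template takes effect on the next call without a restart.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Map;
import java.util.function.Function;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical template shape, mirroring a JSON file such as
// {"id": "req-to-test", "system": "You are a test engineer.", "user": "Draft test steps for: {{requirement}}"}
record PromptTemplate(String id, String system, String user) {}

// Loads templates from a directory and fills {{name}} placeholders in the user prompt.
final class TemplateStore {
    private static final Pattern PLACEHOLDER = Pattern.compile("\\{\\{(\\w+)\\}\\}");

    private final Path directory;
    private final Function<String, PromptTemplate> parser; // e.g. backed by Jackson or Gson

    TemplateStore(Path directory, Function<String, PromptTemplate> parser) {
        this.directory = directory;
        this.parser = parser;
    }

    // Re-read on every call: editing the file changes behaviour on the next request, no restart.
    PromptTemplate load(String id) {
        try {
            return parser.apply(Files.readString(directory.resolve(id + ".json")));
        } catch (IOException e) {
            throw new UncheckedIOException("Cannot read template " + id, e);
        }
    }

    // A missing value fails loudly instead of sending a half-filled prompt to the model.
    String render(String id, Map<String, String> values) {
        Matcher m = PLACEHOLDER.matcher(load(id).user());
        StringBuilder out = new StringBuilder();
        while (m.find()) {
            String value = values.get(m.group(1));
            if (value == null) {
                throw new IllegalArgumentException("No value for placeholder " + m.group(1));
            }
            m.appendReplacement(out, Matcher.quoteReplacement(value));
        }
        m.appendTail(out);
        return out.toString();
    }
}
```

Re-reading on every call keeps the sketch simple. A production version might cache templates and reload one only when its file's modification time changes.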

The "Senior Intern" Mindset

In my daily work, I don’t view AI as a replacement for engineering judgment. I view it as a highly capable Senior Intern. How many hours do we spend on "grunt work", such as copying requirements into test steps or drafting risk summaries? By letting the AI produce a roughly 70% complete draft, we remove the "cold start" problem. Our role shifts from manual entry to expert review. We provide the professional refinement; the AI provides the initial momentum.

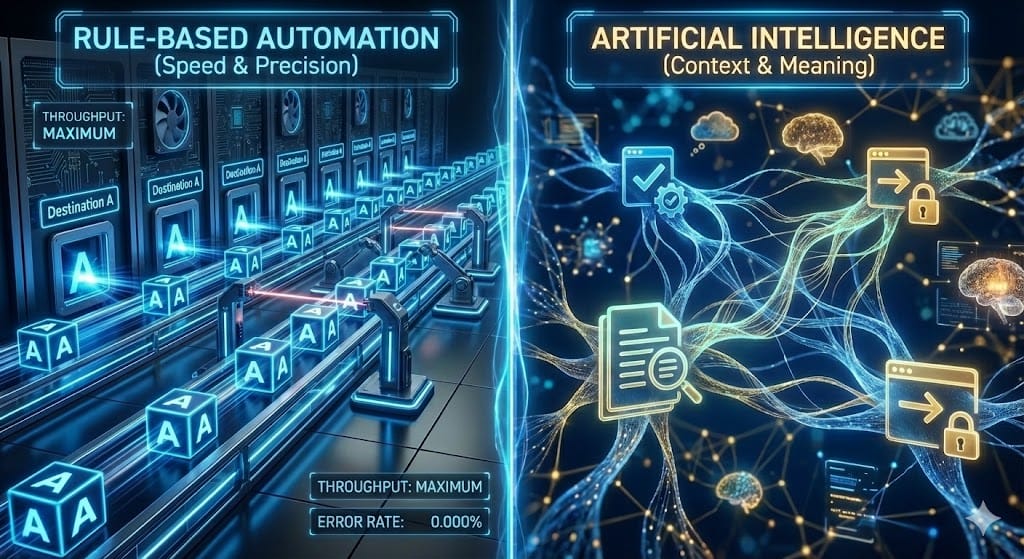

The Boundary: Automation vs. Intelligence

A word of caution: Not everything needs AI.

- Automation is for rules. It's fast, cheap, and binary.

- AI is for context. It is slower and costlier, but it can judge meaning where no fixed rule applies.

If you are just syncing two fields, use a workflow script. If you need to check whether a Test Case actually validates a complex requirement, that is where AI wins.
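The boundary can be illustrated with a small Java sketch. The `Item` record, the `syncPriority` and `reviewCoverage` methods, and the `COVERED`/`GAP` answer format are hypothetical. The model is passed in as a plain `UnaryOperator<String>` so the example runs without any provider; in practice that function would delegate to the centralized handler.

```java
import java.util.Map;
import java.util.function.UnaryOperator;

// Hypothetical stand-in for a work item: an id plus mutable field values.
record Item(String id, Map<String, String> fields) {}

final class Boundary {
    // Automation: a deterministic rule. Same input, same output, no model call.
    static void syncPriority(Item requirement, Item testCase) {
        testCase.fields().put("priority", requirement.fields().get("priority"));
    }

    // Intelligence: a judgment that needs context. The model's answer is returned
    // for a human reviewer; it is never written back into the data automatically.
    static String reviewCoverage(Item requirement, Item testCase, UnaryOperator<String> llm) {
        String prompt = "Requirement " + requirement.id() + ": " + requirement.fields().get("description")
                + "\nTest case " + testCase.id() + ": " + testCase.fields().get("steps")
                + "\nDoes the test case fully validate the requirement? "
                + "Start with COVERED or GAP, then explain.";
        return llm.apply(prompt);
    }
}
```

Note the asymmetry. The sync rule writes to the data directly. The model's verdict is only returned for review, in line with the "Senior Intern" role described above.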

Building for the Future

Polarion's flexibility is a gift, but only if it is managed with clear architectural intent. By building a centralized, template-driven AI foundation, you keep your system maintainable, scalable, and ready for whatever the next generation of models brings.

Stop writing detached scripts. Start building an intelligent ecosystem.

The Path Ahead: A Unified AI Platform for Polarion

I am currently evolving my approach into a client-independent AI platform for Polarion. My goal is to move beyond one-off solutions and create a standardized ecosystem that offers:

- Standardized AI Contexts: Pre-configured templates for requirements engineering, risk analysis, and test automation.

- AI Helpers: A library of utility functions to simplify LLM integration.

- Accessibility: Making these tools available to a wider audience in the Polarion community while ensuring long-term "clean-code" stability.

- Integration: A provider-agnostic design, so the platform works with any LLM.

I am still evaluating the final distribution model. My focus remains on providing the best balance of value and scalability.

Let’s talk strategy. If you want to discuss how standardized AI contexts can transform your ALM workflow, reach out. Let’s turn manual tasks into intelligent ecosystems.